Here’s the uncomfortable truth:

I’ve never met an experienced marketer who genuinely believes last-click numbers reflect reality. And yet 78% of advertisers still rely on last-click attribution.

So what’s going on?

It’s not a lack of intelligence. It’s not a lack of sophistication.

It’s culture.

Last-click persists because it is easy and it is available.

Those numbers are staring at your teams every hour of every day. Every dashboard. Every optimisation meeting. Every budget reallocation.

Meanwhile, every better measurement method (uplift tests, MMM, MTA, incrementality, geo-experiments) sits somewhere far less accessible. Quarterly decks. Complex documentation. A separate dashboard no one checks.

If you think you’re fighting a measurement problem, you’re not.

You’re fighting a workflow problem, a habit problem, and ultimately a culture problem.

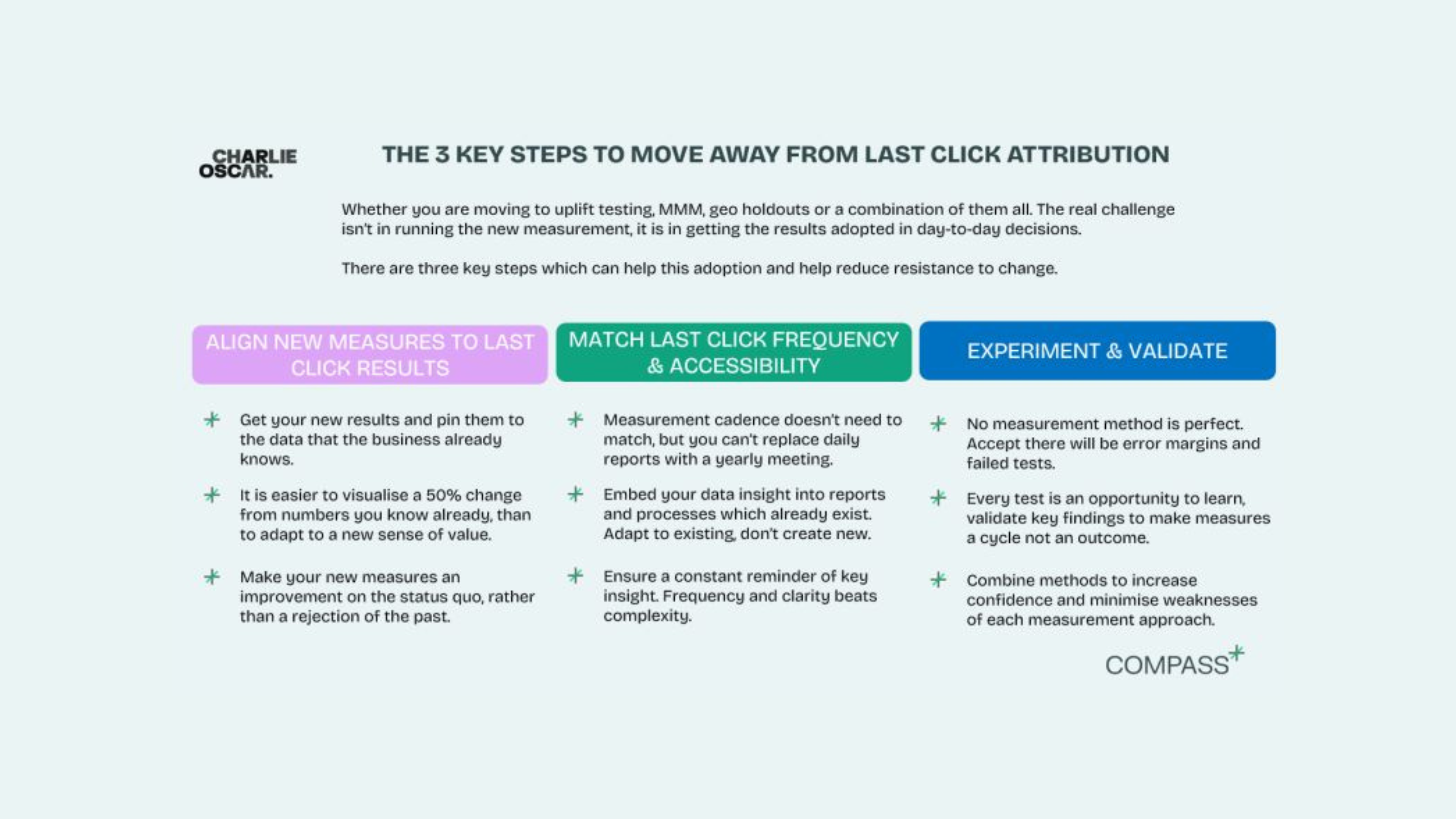

Over the last few years, we’ve learned three things that actually move teams away from last-click without triggering organisational revolt.

1. Anchor new measurement back to the numbers people already trust

If you drop entirely new metrics on a team that has been staring at last-click ROAS for five years, you will lose them in five minutes.

What works instead?

Run your experiments and models (holdouts, A/B tests, MMM), then map the results back to last-click.

That way:

- People can compare like for like
- New measurement becomes tangible instead of theoretical
- Teams don’t have to rebuild their mental models overnight

If your uplift test shows channel X actually performs at 2× its last-click ROAS, then every time the team sees a 1.3 last-click ROAS for that channel, they learn to read it as: “Okay, the real value is closer to 2.6.”

The number on the screen doesn’t change, but the interpretation does.

This is how you shift culture without breaking workflows.
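The arithmetic behind that mental conversion is a single calibration multiplier per channel. The sketch below is a minimal illustration, assuming the multiplier is the ratio of experiment-measured ROAS to last-click ROAS over the same test window, and that it stays roughly stable until the next test. The function names and the 1.5 / 3.0 test figures are hypothetical.

```python
def calibration_multiplier(incremental_roas: float, last_click_roas: float) -> float:
    """Ratio of experiment-measured ROAS to last-click ROAS for one channel over the same window."""
    if last_click_roas <= 0:
        raise ValueError("last-click ROAS must be positive")
    return incremental_roas / last_click_roas


def adjusted_roas(last_click_roas: float, multiplier: float) -> float:
    """Translate a day-to-day last-click ROAS into an experiment-calibrated estimate."""
    return last_click_roas * multiplier


# Hypothetical test window: last-click reported 1.5 ROAS, the uplift test measured 3.0.
m = calibration_multiplier(incremental_roas=3.0, last_click_roas=1.5)
print(m)                      # 2.0
print(adjusted_roas(1.3, m))  # 2.6
```

The multiplier is only as fresh as the last test, so it is worth recomputing whenever a new experiment on that channel completes.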

2. Make new measurement as frequent and accessible as last-click

You cannot rebuild measurement culture if:

- You check last-click every day
- And incrementality only once a quarter

That maths simply won’t work.

You may not be able to run experiments daily, but you can embed experiment results into the data people already look at.

That means:

- No new dashboards
- No new tabs
- No new tools to remember to open

Instead, bake the adjusted values into the workflow your team already uses.

Make it impossible to ignore the “true” value. Turn it into ambient awareness, not a separate step.

When new measurement shows up in the same place as last-click, every day, cultural gravity starts to shift.
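As a minimal sketch of “baking in” adjusted values, the snippet below adds an adjusted ROAS field next to last-click ROAS in the rows of an existing daily report, so both numbers sit side by side in the same view. It assumes the report can be represented as a list of rows with `channel` and `last_click_roas` fields; the multiplier values, channel names and the `add_adjusted_roas` helper are all hypothetical.

```python
# Illustrative multipliers from past experiments, keyed by channel (hypothetical values).
MULTIPLIERS = {"paid_social": 2.0, "branded_search": 0.6}


def add_adjusted_roas(rows: list[dict], multipliers: dict[str, float]) -> list[dict]:
    """Return copies of the report rows with an 'adjusted_roas' field next to last-click ROAS.

    Channels with no experiment result get None, so the gap stays visible
    instead of silently falling back to the last-click number.
    """
    out = []
    for row in rows:
        m = multipliers.get(row["channel"])
        adjusted = round(row["last_click_roas"] * m, 2) if m is not None else None
        out.append({**row, "adjusted_roas": adjusted})
    return out


daily_report = [
    {"channel": "paid_social", "last_click_roas": 1.3},
    {"channel": "branded_search", "last_click_roas": 5.0},
    {"channel": "display", "last_click_roas": 0.8},
]
for row in add_adjusted_roas(daily_report, MULTIPLIERS):
    print(row["channel"], row["last_click_roas"], row["adjusted_roas"])
```

Leaving untested channels as an empty value rather than copying the last-click figure is a deliberate choice: it keeps the team aware of where the calibrated view has no evidence behind it yet.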

3. Validate, experiment, and confront the uncomfortable edges

Every measurement method has flaws.

Every method will contradict another method at some point.

This is where most teams freeze.

Instead of panicking, run controlled tests to validate the discrepancies:

- If the new model wins → confidence goes up
- If the new model struggles → you’ve found a blind spot you can adjust for
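One simple way to run that check is to compare a model’s estimate for a channel against a controlled test’s result. The sketch below assumes each test reports a point estimate and a confidence interval for incremental ROAS; `TestResult` and `check_model` are hypothetical names, and “the model estimate falls inside the test’s interval” is a rough rule of thumb rather than a formal statistical test of disagreement.

```python
from dataclasses import dataclass


@dataclass
class TestResult:
    estimate: float  # incremental ROAS measured by the holdout or geo test
    ci_low: float    # lower bound of the test's confidence interval
    ci_high: float   # upper bound of the test's confidence interval


def check_model(model_estimate: float, test: TestResult) -> str:
    """Classify how a model's ROAS estimate compares with a controlled test."""
    if test.ci_low <= model_estimate <= test.ci_high:
        return "consistent"         # model survives the test: confidence goes up
    if model_estimate > test.ci_high:
        return "model overstates"   # blind spot: the model is too generous here
    return "model understates"      # blind spot: the model is too conservative here


test = TestResult(estimate=2.4, ci_low=1.8, ci_high=3.0)
print(check_model(2.1, test))
print(check_model(3.5, test))
```

Either outcome is useful: agreement raises confidence in the model, and a mismatch points to exactly which channel needs a correction.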

The most credible experts in any field don’t pretend their method is perfect.

They understand its weaknesses and compensate for them.

Adopting better measurement is not about certainty.

It’s about confidence-weighted decision-making.

The Truth: Most brands aren’t using last-click because they believe in it

They use it because it’s the default mode their culture operates in.

Changing attribution isn’t about teaching people new maths; most marketers already understand that last-click is flawed.

It’s about rewiring years of habits, processes, and organisational muscle memory.

The three approaches above won’t fix everything, but they will get you moving:

- Anchor new measurement to familiar numbers
- Make incrementality as visible as last-click
- Validate and stress-test your results

Shift the workflow, and the culture follows.

Shift the culture, and the measurement finally changes.